Kalray Data Acceleration Cards for High-Performance Storage and Compute

Kalray K200 & TURBOCARD4

Efficient support of data-intensive workloads

AI acceleration: up to 25 TFLOPS (16-bit) / 50 TOPS (8-bit)

Best performance per watt and per dollar

Power consumption as low as 30 W

Unprecedented programmability, featuring open and pluggable architectures

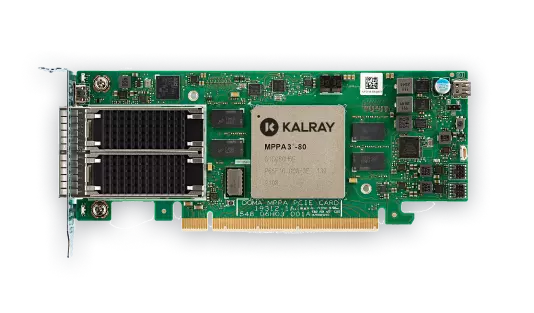

Kalray Acceleration Cards

Coolidge is the third generation of Kalray’s MPPA DPUs. It offers an unprecedented level of performance and programmability and is a game changer for data-centric, heterogeneous processing tasks, as it significantly increases performance per watt and per dollar. Kalray DPUs are engineered to balance performance and power consumption, giving organizations energy-efficient processing for their data workloads without compromising processing capability. Kalray DPUs are featured in Kalray’s Storage and Compute Acceleration Cards.

K200 Storage Acceleration Cards

K200 is Kalray’s family of data-centric acceleration cards based on Coolidge. The K200 storage acceleration cards offer an unprecedented level of performance and programmability and are a game-changing solution in terms of performance per watt and per dollar. Kalray K200 cards are easy to configure with the Easy Programming and Open software environment and can be used for networking, storage, security, AI, and compute acceleration.

DATASHEET

By integrating K200-LP DPU PCIe cards into data storage servers and storage enclosures, data centers can accelerate their workloads…

TURBOCARD4 Compute Acceleration Cards

TURBOCARD4 (TC4) embodies Kalray’s vision for addressing the intricate demands of modern computing workloads. Housing four of Kalray’s latest-generation DPUs, Coolidge2™, on a single PCIe card, TC4 is designed to let customers merge classical and AI-based processing and build superior, efficiency-driven systems for the most processing-intensive AI applications. DPUs offer an architecture that is highly complementary to GPUs: they can process a large number of different operations in parallel and asynchronously. This makes DPUs well suited to pre-processing data that GPUs later consume, and to complex intelligent systems that run many different algorithms in parallel.


| Features | Benefits |
|---|---|
| High-performance data-centric processor with real-time processing | Process more data faster |
| Parallel execution of heterogeneous multi-processing tasks | Process more data faster |
| Fully programmable with open software environment | Easy to program and integrate |
| High-speed interfaces connected to high-speed fabrics | Leverage state-of-the-art networking technologies |
| Secure islands, encryption/decryption, secure boot | Data is secure during processing |
| Power efficiency | Process more data per watt |

Kalray Solutions

Machine Learning

Machine Vision

5G / Edge Computing

Automotive

Intelligent Data Centers

Kalray MPPA® DPU Manycore

A Massively Parallel Processor Array Architecture

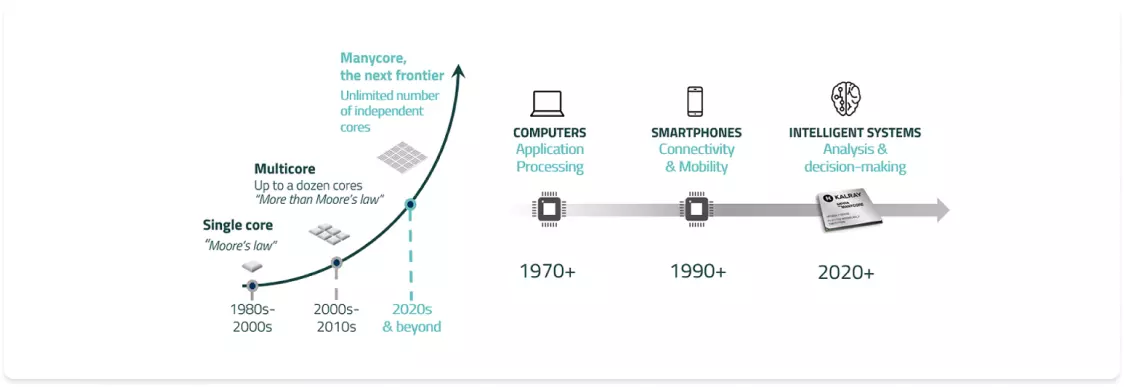

The world is facing an explosion of data that current technologies were not designed for and cannot always handle efficiently. The industry needs a new type of processor. Enter the era of the intelligent DPU (Data Processing Unit).

The overall architecture of Coolidge™, Kalray’s third-generation MPPA® DPU (Data Processing Unit), is a “Massively Parallel Processor Array”: compute clusters are connected to each other, to external memory, and to the I/O interfaces through two independent interconnects.

The two interconnects are suited to different types of data transfers. The first is an AXI Fabric bus grid, used for read/write accesses from the cores to memories and to peripherals connected over PCIe. The second is an RDMA NoC (Network-on-Chip), which supports data transfers to and from the Ethernet network interfaces and connects all clusters together.
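To make the division of labor between the two interconnects concrete, here is a minimal sketch that maps a transfer's endpoints to an interconnect, following the description above: core read/write accesses to memories and PCIe peripherals use the AXI Fabric, while Ethernet transfers and cluster-to-cluster traffic use the RDMA NoC. It is a simplified, hypothetical model. The `Endpoint` and `Interconnect` enums and the `select_interconnect` function are illustrative names, not a Kalray API, and the real routing of traffic on the chip is handled by hardware and software not described here.

```python
from enum import Enum, auto


class Interconnect(Enum):
    AXI_FABRIC = auto()  # core read/write accesses to memories and PCIe peripherals
    RDMA_NOC = auto()    # Ethernet transfers and cluster-to-cluster traffic


class Endpoint(Enum):
    CLUSTER = auto()          # a compute cluster (its cores)
    MEMORY = auto()           # a memory target
    PCIE_PERIPHERAL = auto()  # a peripheral reached over PCIe
    ETHERNET = auto()         # an Ethernet network interface


def select_interconnect(src: Endpoint, dst: Endpoint) -> Interconnect:
    """Pick an interconnect for a transfer (hypothetical model, not a Kalray API)."""
    ends = {src, dst}
    # Anything to or from the Ethernet interfaces goes over the RDMA NoC.
    if Endpoint.ETHERNET in ends:
        return Interconnect.RDMA_NOC
    # Cluster-to-cluster transfers also use the NoC, which links all clusters.
    if ends == {Endpoint.CLUSTER}:
        return Interconnect.RDMA_NOC
    # Core accesses to memories or PCIe peripherals use the AXI Fabric.
    if Endpoint.CLUSTER in ends and ends & {Endpoint.MEMORY, Endpoint.PCIE_PERIPHERAL}:
        return Interconnect.AXI_FABRIC
    raise ValueError(f"transfer {src.name} -> {dst.name} is not covered by this model")


print(select_interconnect(Endpoint.CLUSTER, Endpoint.MEMORY).name)   # AXI_FABRIC
print(select_interconnect(Endpoint.ETHERNET, Endpoint.CLUSTER).name)  # RDMA_NOC
```

The model raises an error for endpoint pairs the text does not describe rather than guessing which interconnect they would use.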

The robust partitioning required for safe operation of the processor is done at the granularity of the compute cluster. It relies on configuring the memory management units (MMUs) and memory protection units (MPUs), and on selectively deactivating network-on-chip links.
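As a rough mental model of cluster-granular partitioning, the following sketch assigns each whole compute cluster to a partition, keeps only the network-on-chip links whose two endpoints share a partition, and uses an MPU-style region table to decide whether a cluster may access a given address. Everything in it is hypothetical: the four-cluster full-mesh topology, the partition names, the address ranges, and the functions `enabled_noc_links` and `access_allowed` are illustrative and are not taken from Kalray's software or hardware documentation.

```python
from itertools import combinations

# Hypothetical example topology: four clusters, every pair joined by a NoC link.
CLUSTERS = range(4)
links = list(combinations(CLUSTERS, 2))

# Cluster-granular partitioning: each whole cluster belongs to exactly one partition.
partition_of = {0: "safety", 1: "safety", 2: "app", 3: "app"}

# MPU-style table: half-open address ranges [start, end) granted to each partition.
regions = {
    "safety": [(0x0000, 0x1000)],
    "app": [(0x1000, 0x4000)],
}


def enabled_noc_links(links, partition_of):
    """Keep a link only if both endpoint clusters share a partition.

    All other links are treated as deactivated, so in this model
    no NoC traffic can cross a partition boundary.
    """
    return {(a, b) for a, b in links if partition_of[a] == partition_of[b]}


def access_allowed(cluster, address, partition_of, regions):
    """Return True if `address` lies in a region granted to the cluster's partition."""
    return any(start <= address < end for start, end in regions[partition_of[cluster]])


print(sorted(enabled_noc_links(links, partition_of)))  # [(0, 1), (2, 3)]
print(access_allowed(2, 0x0800, partition_of, regions))  # False: 0x0800 is in the "safety" range
```

Because whole clusters are the unit of assignment, isolating two workloads in this model reduces to two independent checks: no active link joins their clusters, and no memory region is shared between their partitions.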


WHITE PAPER

A new generation of processor is needed to support the “explosion of data” we’re experiencing today